Publications

2024

Can Generative Models Improve Self-Supervised Representation Learning?

AAAI 2025

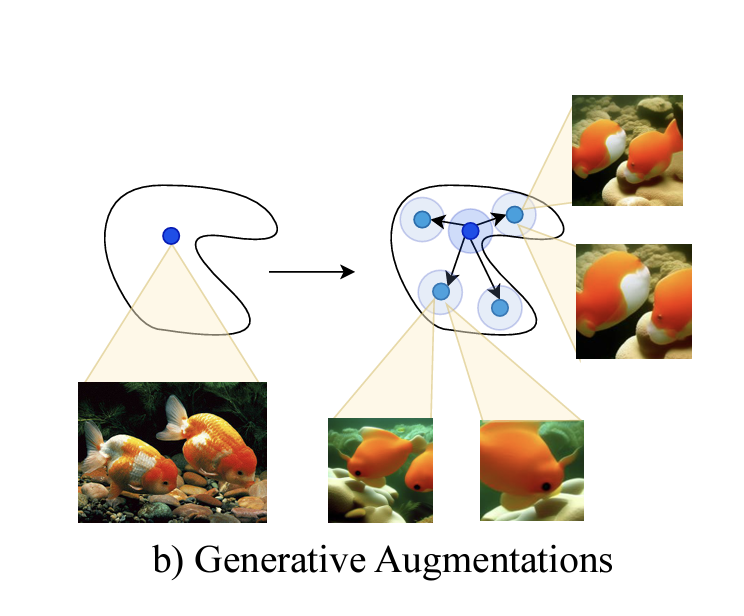

The rapid advancement in self-supervised learning (SSL) has highlighted its potential to leverage unlabeled data for learning rich visual representations. However, the existing SSL techniques, particularly those employing different augmentations of the same image, often rely on a limited set of simple transformations that are not representative of real-world data variations. This constrains the diversity and quality of samples, which leads to sub-optimal representations. In this paper, we introduce a novel framework that enriches the SSL paradigm by utilizing generative models to produce semantically consistent image augmentations. By directly conditioning generative models on a source image representation, our method enables the generation of diverse augmentations while maintaining the semantics of the source image, thus offering a richer set of data for self-supervised learning. Our extensive experimental results on various SSL methods demonstrate that our framework significantly enhances the quality of learned visual representations by up to 10% Top-1 accuracy in downstream tasks. This research demonstrates that incorporating generative models into the SSL workflow opens new avenues for exploring the potential of synthetic data. This development paves the way for more robust and versatile representation learning techniques.

PolyOculus: Simultaneous Multi-view Image-based Novel View Synthesis

European Conference on Computer Vision (ECCV) 2024

This paper considers the problem of generative novel view synthesis (GNVS), generating novel, plausible views of a scene given a limited number of known views. Here, we propose a set-based generative model that can simultaneously generate multiple, self-consistent new views, conditioned on any number of views. Our approach is not limited to generating a single image at a time and can condition on a variable number of views. As a result, when generating a large number of views, our method is not restricted to a low-order autoregressive generation approach and is better able to maintain generated image quality over large sets of images. We evaluate our model on standard NVS datasets and show that it outperforms the state-of-the-art image-based GNVS baselines. Further, we show that the model is capable of generating sets of views that have no natural sequential ordering, like loops and binocular trajectories, and significantly outperforms other methods on such tasks.

Long-Term Photometric Consistent Novel View Synthesis with Diffusion Models

International Conference on Computer Vision (ICCV) 2023

Novel view synthesis from a single input image is a challenging task, where the goal is to generate a new view of a scene from a desired camera pose that may be separated by a large motion. The highly uncertain nature of this synthesis task due to unobserved elements within the scene (i.e., occlusion) and outside the field-of-view makes the use of generative models appealing to capture the variety of possible outputs. In this paper, we propose a novel generative model which is capable of producing a sequence of photorealistic images consistent with a specified sequence and a single starting image. Our approach is centred on an autoregressive conditional diffusion-based model capable of interpolating visible scene elements and extrapolating unobserved regions in a view- and geometry-consistent manner. Conditioning is limited to an image capturing a single camera view and the (relative) pose of the new camera view. To measure the consistency over a sequence of generated views, we introduce a new metric, the thresholded symmetric epipolar distance (TSED), to measure the number of consistent frame pairs in a sequence. While previous methods have been shown to produce high-quality images and consistent semantics across pairs of views, we show empirically with our metric that they are often inconsistent with the desired camera poses. In contrast, we demonstrate that our method produces both photorealistic and view-consistent imagery.
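
The abstract above names TSED but does not define it. As a rough illustration only, the idea of counting consistent frame pairs could be sketched as follows. This is not the paper's implementation: it assumes the textbook symmetric epipolar distance (the mean of the two point-to-epipolar-line distances), a median over matched keypoints, and hypothetical parameters `max_error` and `min_matches`. The fundamental matrix `F` is assumed to be derived from the desired camera poses, and the matches are assumed to come from an external feature matcher.

```python
import math
from statistics import median


def epipolar_line(F, p):
    """Return the line (a, b, c) = F @ [x, y, 1] for a 3x3 nested-list F."""
    x, y = p
    return tuple(F[i][0] * x + F[i][1] * y + F[i][2] for i in range(3))


def point_line_distance(p, line):
    """Perpendicular distance from 2D point p to the line ax + by + c = 0."""
    a, b, c = line
    # Note: a degenerate line (a == b == 0) would divide by zero here.
    return abs(a * p[0] + b * p[1] + c) / math.hypot(a, b)


def symmetric_epipolar_distance(F, p1, p2):
    """Mean of the two point-to-epipolar-line distances for a match (p1, p2).

    Assumes the convention x2^T F x1 = 0, so F maps points in image 1
    to epipolar lines in image 2, and F^T does the reverse.
    """
    F_t = [[F[j][i] for j in range(3)] for i in range(3)]
    d2 = point_line_distance(p2, epipolar_line(F, p1))
    d1 = point_line_distance(p1, epipolar_line(F_t, p2))
    return 0.5 * (d1 + d2)


def pair_is_consistent(F, matches, max_error=2.0, min_matches=10):
    """Hypothetical rule: enough matches and a small median distance."""
    if len(matches) < min_matches:
        return False
    errors = [symmetric_epipolar_distance(F, p1, p2) for p1, p2 in matches]
    return median(errors) < max_error


def tsed_fraction(pairs, max_error=2.0, min_matches=10):
    """Fraction of (F, matches) frame pairs judged consistent."""
    consistent = sum(
        pair_is_consistent(F, m, max_error, min_matches) for F, m in pairs
    )
    return consistent / len(pairs)


# Usage: a pure horizontal translation, where matches must share a row.
F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]
good = [((i, i), (i + 3, i)) for i in range(12)]      # same row in both views
bad = [((i, i), (i + 3, i + 5)) for i in range(12)]   # 5 pixels off the line
print(tsed_fraction([(F, good), (F, bad)]))
```

In this toy setup the first pair satisfies the epipolar constraint exactly and the second violates it by five pixels, so only one of the two pairs counts as consistent under the assumed thresholds.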